
Machine learning has the potential to revolutionise the provision of healthcare through rapid routine testing, democratised access and real-time data gathered as care is delivered.

To achieve these benefits, users of the health service must engage fully and honestly with it. For this to happen, users must trust that the provider holds their best health interests above all other concerns.

This trust must be earned by building a trustworthy platform from the ground up. Some thought leaders have flagged potential threats to this trust:

- Representation in data sets

- Conflicting commercial interests

- Difficulty deciphering how deep neural networks make decisions

Let’s explore some examples of each of these challenges below.

Representation in datasets

Machine vision is one of the most prominent early wins in the health AI space. Its advantage over a human observer lies in its ability to learn from millions of tagged image “case studies” and, given good lighting, in its superior colour differentiation and edge detection. These properties allow a well-trained AI to spot skin lesions, discolouration and even texture. A further advantage is perfect recall: an AI can track the progression of a specific feature over months and years.

However good the algorithm, machine learning models are fundamentally limited by the data sets used to train them. Like a human physician, an AI is only as good as the training it receives.

The human equivalent of this problem was recently highlighted by Malone Mukwende in his handbook “Mind the Gap”, which uses example pictures to show how signs of underlying disease present differently on darker skin, a point not covered in enough detail in much of the existing medical literature.

This example suggests that smaller communities are also likely to be under-represented in the data used to train AI models.

Simple multiplication, that is, duplicating the few existing examples from under-represented groups, has been suggested as a remedy. However, skewing the model in this way is likely to add to its inaccuracy. A better solution would be an active effort to add comprehensively tagged data sets representing conditions as they appear in underserved communities, building a branch of the AI model that is more competent in diagnosing them.
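To make the concern about multiplication concrete, here is a minimal sketch of naive oversampling by duplication on a toy dataset. The record layout, the `skin_tone` field and the helper `oversample_by_duplication` are hypothetical illustrations, not part of any real pipeline. The groups end up the same size, but no new information about the under-represented group is added, so a model trained on the result may simply memorise those few examples.

```python
import random
from collections import Counter


def oversample_by_duplication(records, group_key, seed=0):
    """Hypothetical helper: naive 'multiplication' that duplicates records
    from smaller groups until every group matches the largest one."""
    rng = random.Random(seed)
    by_group = {}
    for r in records:
        by_group.setdefault(r[group_key], []).append(r)
    target = max(len(rs) for rs in by_group.values())
    balanced = []
    for rs in by_group.values():
        balanced.extend(rs)
        # Extra copies are drawn (with replacement) from existing examples only
        balanced.extend(rng.choice(rs) for _ in range(target - len(rs)))
    return balanced


# Hypothetical toy data: 8 images tagged "lighter", 2 tagged "darker"
records = [{"id": i, "skin_tone": "lighter"} for i in range(8)]
records += [{"id": 100 + i, "skin_tone": "darker"} for i in range(2)]

balanced = oversample_by_duplication(records, "skin_tone")
counts = Counter(r["skin_tone"] for r in balanced)
unique_darker = {r["id"] for r in balanced if r["skin_tone"] == "darker"}
print(counts)              # both groups now have 8 records
print(len(unique_darker))  # ...but still only 2 distinct darker-skin images
```

Collecting genuinely new, well-tagged images is the only way to raise the second number.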

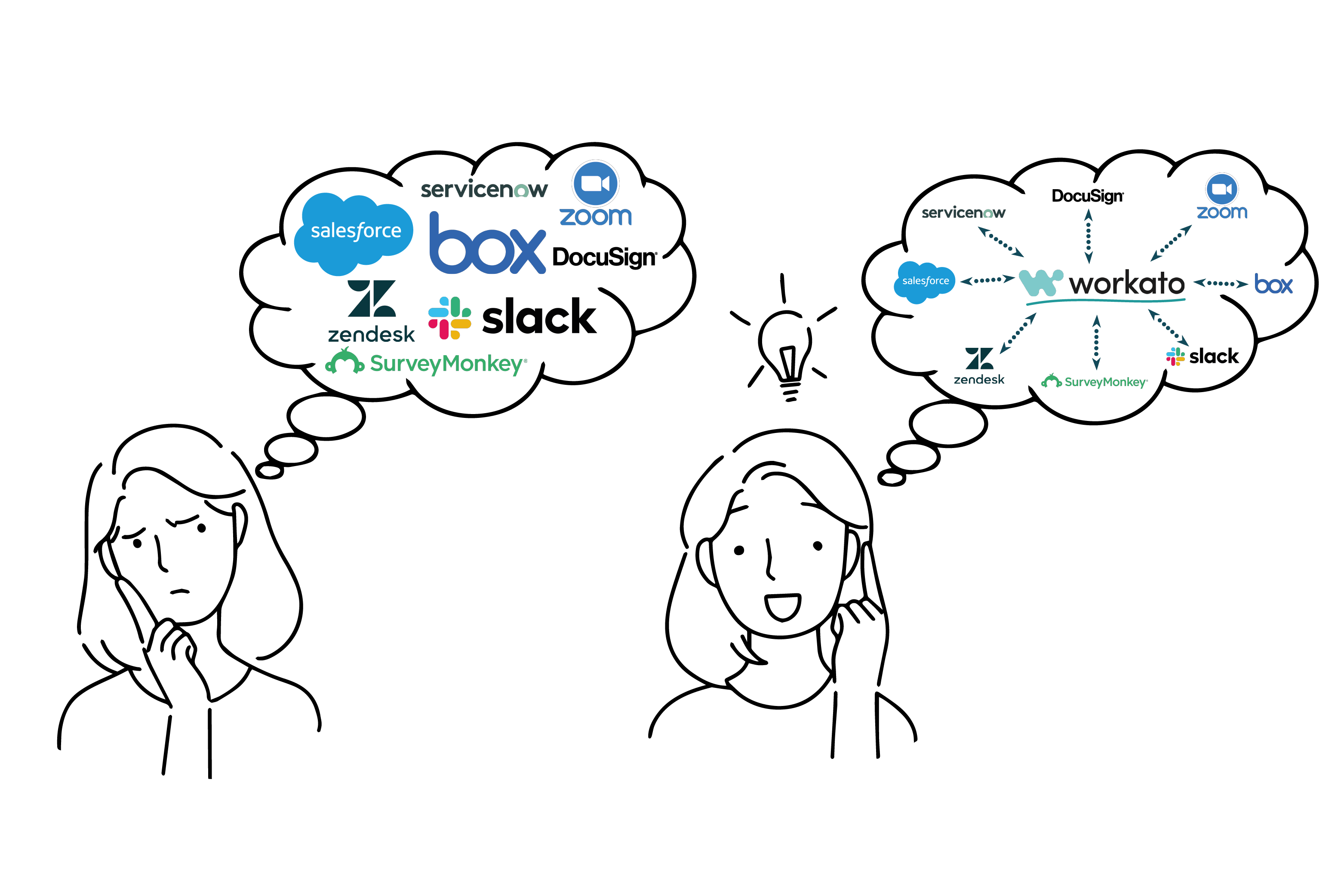

Conflicting commercial interests

The data sets used to train a health AI will invariably be massive, and processing at that scale will likely require third-party providers of cloud compute capacity. Involving commercial third parties raises the issue of data ownership and control. The primary business model of many leading cloud service providers revolves around retail and/or advertising, and the large quantities of confidential personal data required to extract meaningful health insights are, by their nature, extremely valuable to any company selling products or information to other parties. Providers of this infrastructure will therefore have to be vetted thoroughly and/or bound by carefully worded contracts to ensure that patient data is not abused. Alternatively, providers specialising in scientific GPU compute could be considered, as their lack of competing interests may make them more reliable partners.

Difficulty deciphering how deep neural networks make decisions

The more hidden layers a deep neural network has, the more accurate, comprehensive and flexible it can become. The downside is that as the hidden layer count increases, it becomes increasingly difficult to interrogate the model and discover how much weight it gave each factor in arriving at its decision. While various organisations have developed interpretability tools that automatically produce a more decipherable representation of a model, most modern AI models still operate as black boxes.
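One family of interpretability techniques treats the model purely as a black box and measures how much its performance drops when each input is scrambled. The sketch below shows permutation feature importance, one model-agnostic technique of this kind; it is not the method of any particular organisation, and the toy `black_box` model and data are hypothetical. A large drop means the model leans heavily on that input; a drop near zero means the input barely matters. It gives only a coarse, global view and does not explain individual decisions.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_features, seed=0):
    """Accuracy lost when each feature column is shuffled in turn.

    The model is treated purely as a black box: only its predictions are used.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the labels
        shuffled_rows = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled_rows, labels))
    return importances


# Hypothetical stand-in for an opaque model: it secretly uses only feature 0.
def black_box(row):
    return int(row[0] > 0.5)


data_rng = random.Random(42)
rows = [tuple(data_rng.random() for _ in range(3)) for _ in range(200)]
labels = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, labels, n_features=3)
print(importances)  # clearly positive for feature 0, 0.0 for features 1 and 2
```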

This difficulty may seem insurmountable; however, I believe the problem can be sidestepped with appropriate monitoring and validation. AI tools should be used to augment the capacity of a health professional. In that role, AI findings can be continuously validated against both the specialist's opinion and feedback from patient outcomes.
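As a minimal sketch of this kind of monitoring, the code below tallies how often the AI's findings agree with the specialist's opinion and, once known, with the confirmed patient outcome, and flags the model for review when either rate falls below a threshold. The `Case` record, the labels and the 0.9 threshold are hypothetical choices for illustration; in practice, clinically chosen metrics (for example, sensitivity and specificity per condition and per patient group) would likely be more informative than raw agreement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Case:
    ai_finding: str                # what the AI model reported
    specialist_opinion: str        # what the reviewing specialist concluded
    outcome: Optional[str] = None  # confirmed diagnosis, once it is known


def validation_report(cases, min_rate=0.9):
    """Compare AI findings with specialist opinions and confirmed outcomes,
    and flag the model for review if either agreement rate drops too low."""
    if not cases:
        raise ValueError("no cases to validate")
    agreement = sum(c.ai_finding == c.specialist_opinion for c in cases) / len(cases)
    resolved = [c for c in cases if c.outcome is not None]
    outcome_accuracy = (
        sum(c.ai_finding == c.outcome for c in resolved) / len(resolved)
        if resolved
        else None
    )
    needs_review = agreement < min_rate or (
        outcome_accuracy is not None and outcome_accuracy < min_rate
    )
    return {
        "specialist_agreement": agreement,
        "outcome_accuracy": outcome_accuracy,
        "needs_review": needs_review,
    }


# Hypothetical batch of recent cases
cases = [
    Case("malignant", "malignant", "malignant"),
    Case("benign", "benign"),  # outcome not yet known
    Case("benign", "malignant", "malignant"),
    Case("malignant", "malignant", "benign"),
]
report = validation_report(cases)
print(report)
```

Keeping the specialist in the loop means a drifting or biased model shows up as falling agreement long before it can do widespread harm.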

There is much more to be said on each of these points, and we are likely to face many more challenges as we realise the potential of AI-enhanced healthcare. However, if we build a platform that addresses these core trust-building challenges, we will achieve comprehensive community buy-in, resulting in more accurate modelling and better health for all.
